AI Accelerates Scientific Innovation: DeepMind’s 36-Page Report Highlights a Revolutionary Era for Global Laboratories

Artificial Intelligence (AI) is transforming the world of scientific research, ushering in a new golden age of discovery. According to OpenAI scientist Jason Wei, the focus of AI development is shifting from consumer applications to scientific breakthroughs, a transition expected to gain momentum within the next year.

11/27/2024 · 11 min read

Over the past two years, AI development has focused on driving user growth, and it has achieved widespread adoption. After all, attracting new users is the cornerstone of business success.

However, the everyday applications of AI are now reaching a saturation point. For the majority of common queries, many large language models (LLMs) can already provide reasonably accurate and satisfactory responses.

The speed and fluency of these models are sufficient to meet the needs of most users. Even with further optimization, the potential for improvement is limited, as the technical complexity of these tasks is relatively low.

Looking ahead, the real opportunities may lie in the fields of science and engineering.

Recently, OpenAI scientist Jason Wei shared a prediction: in the coming year, the focus of AI development could shift from everyday usage to advancements in scientific research.

He believes that in the next five years, AI will shift its focus to tackling more advanced challenges—leveraging AI to accelerate progress in science and engineering. These fields are the true engines driving technological breakthroughs.

For simple queries from everyday users, the potential for improvement is minimal. However, in every cutting-edge scientific domain, there is immense room for advancement. AI is uniquely positioned to address the “top 1% of problems” that can propel technological leaps forward.

AI not only has the potential to solve these critical problems but also inspires people to take on even greater challenges. Moreover, AI’s progress creates a positive feedback loop, accelerating research in AI itself and helping it become even more powerful. This compounding effect makes AI the ultimate force for exponential growth.

In short, the next five years will mark the era of the "AI scientist" and the "AI engineer."

A recent paper from DeepMind highlights this trend: laboratories worldwide are witnessing exponential growth in the adoption of AI, as scientists increasingly rely on it to push the boundaries of discovery and innovation.

The Golden Age of AI-Powered Scientific Discovery

We are entering a transformative era where artificial intelligence is accelerating scientific innovation like never before. Today, one in three postdoctoral researchers leverages large language models (LLMs) to assist with tasks such as literature reviews, programming, and academic writing, revolutionizing how research is conducted.

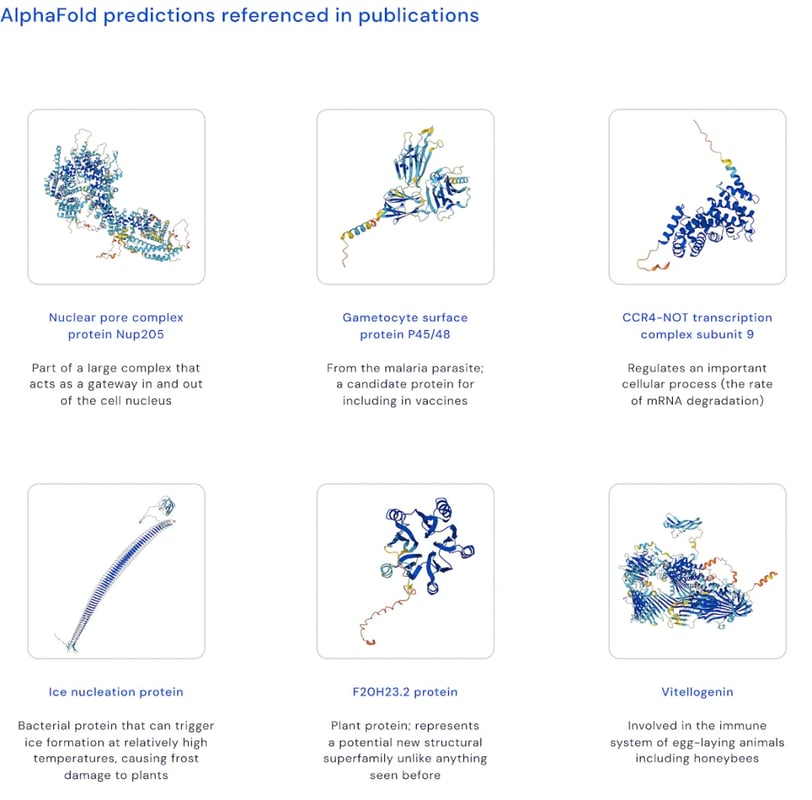

This year’s Nobel Prize in Chemistry marked a groundbreaking moment: half of it went to Demis Hassabis and John Jumper for AlphaFold 2, with the other half awarded to David Baker for computational protein design. The AlphaFold work not only astonished the scientific community but also inspired countless researchers to integrate AI into their respective fields, unlocking new avenues for innovation and discovery.

Despite the number of scientists growing more than sevenfold in the past half-century in the U.S. alone, the pace of technological progress has slowed. One key reason is the increasing scale and complexity of modern scientific challenges.

AI, particularly deep learning, excels at unraveling such complexity while dramatically reducing the time and cost of scientific discovery. For instance, determining the structure of a single protein with traditional X-ray crystallography could take years and cost over $100,000. In contrast, AlphaFold offers structure predictions for over 200 million proteins, instantly and for free. These are predictions rather than measurements, so they complement experimental methods instead of replacing them.

The integration of AI into science marks the dawn of a new golden age, where the convergence of human expertise and machine intelligence propels discovery to unprecedented heights.

Five Opportunities to Break Through Scientific Bottlenecks

1. Knowledge: Transforming Access and Dissemination

To drive innovation, scientists must navigate an ever-expanding, highly specialized body of knowledge. This growing "knowledge burden" increasingly favors interdisciplinary teams at elite universities, leaving smaller research groups less competitive.

Additionally, scientific findings are often confined to complex, English-language papers that limit engagement from policymakers, businesses, and the public. AI, particularly large language models (LLMs), is changing this landscape.

For example, research teams can use tools like Google Gemini to extract insights from 200,000 papers in a single day. LLMs empower both scientists and the public by simplifying complex academic content, enabling users to summarize and interact with cutting-edge knowledge in ways that close the gap between experts and laypersons.

2. Data: Generating, Extracting, and Annotating Large Scientific Datasets

While we live in an era of data abundance, critical scientific domains like soil science, deep-sea exploration, atmospheric research, and informal economies still suffer from data scarcity. AI offers solutions to bridge these gaps by reducing noise and errors in processes like DNA sequencing, cell-type identification, and animal sound analysis.

The multimodal capabilities of LLMs can transform unstructured scientific resources—publications, archival documents, and video footage—into structured datasets for further research. AI can also annotate auxiliary information for scientific data. For instance, many microbial proteins lack detailed annotations regarding their functions.

Synthetic data creation is another frontier. Models like AlphaProteo, trained on over 100 million AI-predicted structures and on experimentally validated protein databases, generate novel protein designs. This opens new possibilities for experimental and computational science.

3. Experimentation: Simulating, Accelerating, and Guiding Complex Experiments

Scientific experiments are often cost-prohibitive, logistically complex, or constrained by limited access to facilities, resources, or materials. AI can simulate these experiments, drastically reducing costs and timelines.

For example, reinforcement learning agents can simulate and optimize physical systems like plasma in tokamak reactors, a critical step toward nuclear fusion—a potential game-changer for limitless, emission-free energy. Similar approaches can accelerate innovations in particle accelerators, telescopes, and other large-scale infrastructure.

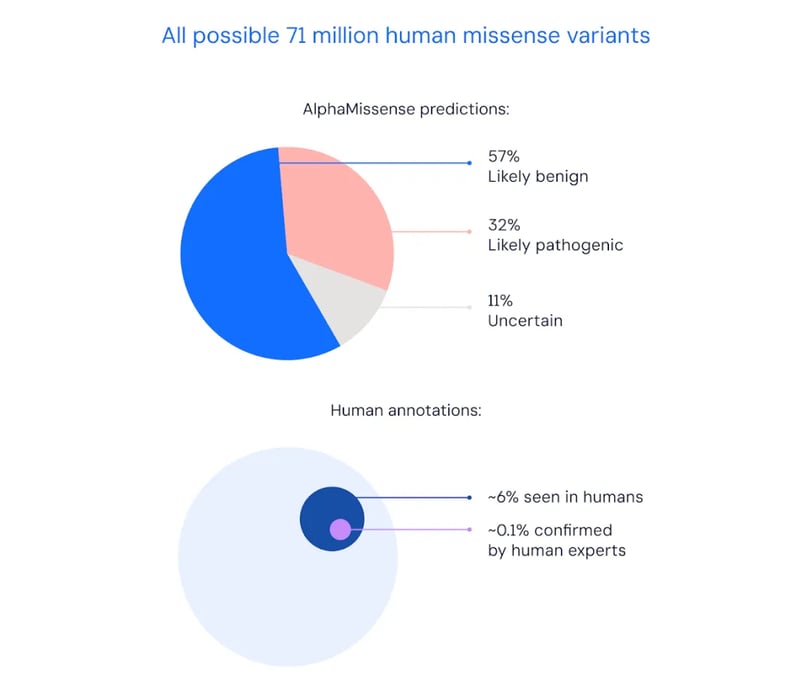

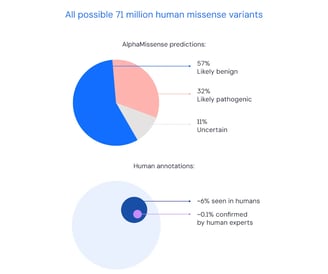

AI not only speeds up experiments but also guides real-world tests. In genomics, for example, AlphaMissense can classify 89% of the 71 million possible missense variants, helping scientists focus on high-risk mutations and accelerate disease research.

1. Problem Selection

The cornerstone of scientific progress lies in identifying problems that truly matter. DeepMind emphasizes targeting "root problems"—foundational scientific challenges like protein structure prediction or quantum chemistry—capable of unlocking unprecedented avenues for research and application.

A well-chosen problem typically possesses three characteristics:

A vast combinatorial search space.

Abundant data availability.

Clear, measurable objective functions.

For example, breakthroughs like DeepMind's advancements in nuclear fusion stem from tackling high-impact challenges using cutting-edge AI methodologies, such as the maximum a posteriori policy optimization (MPO) algorithm.

Additionally, the difficulty of the problem must be calibrated to yield intermediate results, facilitating iterative improvement. Striking this balance requires a blend of intuition and experimentation.

2. Model Evaluation

Evaluating AI models in scientific research demands tailored methodologies. Beyond standard metrics and benchmarks, comprehensive evaluation strategies can foster innovation and spark interest in scientific challenges.

For instance, DeepMind's weather forecasting team moved from evaluating a few critical variables to a more holistic approach, using over 1,300 metrics inspired by the European Centre for Medium-Range Weather Forecasts (ECMWF). The team also assessed downstream utility, such as how well the model predicts cyclone tracks and atmospheric river intensity.
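A scorecard of this kind can be sketched in a few lines. The example below is a minimal illustration, not DeepMind's or ECMWF's evaluation code: the function name, the choice of RMSE as the error measure, the variable names, and all numeric values are assumptions made up for demonstration.

```python
from typing import Dict, Tuple

# A "target" is one cell of the scorecard: (variable name, lead time in hours).
Target = Tuple[str, int]


def scorecard_win_rate(
    model_rmse: Dict[Target, float],
    baseline_rmse: Dict[Target, float],
) -> float:
    """Return the fraction of shared targets where the model's error is lower."""
    shared = model_rmse.keys() & baseline_rmse.keys()
    if not shared:
        raise ValueError("no shared targets to compare")
    wins = sum(1 for target in shared if model_rmse[target] < baseline_rmse[target])
    return wins / len(shared)


# Made-up RMSE values purely for illustration; these are not real forecast results.
model_rmse = {("z500", 24): 40.0, ("z500", 240): 700.0, ("t2m", 24): 1.0, ("t2m", 240): 3.1}
baseline_rmse = {("z500", 24): 45.0, ("z500", 240): 720.0, ("t2m", 24): 1.2, ("t2m", 240): 3.0}

win_rate = scorecard_win_rate(model_rmse, baseline_rmse)
print(f"Model beats baseline on {win_rate:.0%} of targets")
```

A single win rate hides how large each gap is, which is one reason a holistic evaluation also looks at downstream tasks rather than relying on the scorecard alone.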

Community-driven initiatives like the CASP protein structure prediction competition exemplify impactful evaluation frameworks. However, such benchmarks must evolve regularly to mitigate risks like data leakage and maintain their effectiveness as progress indicators.

3. Computational Resources

Computation drives both AI and scientific breakthroughs, but it also raises sustainability concerns. Striking a balance between computational demands and efficiency improvements is essential.

For example:

Compact and efficient models like those used for protein design consume fewer resources.

Large language models, while computationally intensive during training, can be optimized through fine-tuning and distillation to reduce inference costs.
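As a rough illustration of distillation, the sketch below computes the classic soft-target loss, in which a small "student" model is trained to match the temperature-softened output distribution of a large "teacher". The text does not say which distillation variant is meant, so the formulation, the function names, and the temperature value are assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over logits divided by the temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, close_student))  # small: student mimics teacher
print(distillation_loss(teacher, far_student))    # larger: student disagrees
```

Minimizing this loss during training pushes the student toward the teacher's behavior, so the cheaper student can then serve requests at inference time.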

Beyond resource availability, fostering critical infrastructure and engineering expertise ensures system reliability, particularly in academia and public research institutions, which often face resource constraints.

4. Data as Infrastructure

High-quality, curated datasets are indispensable for advancing AI in science. Success stories like the Materials Project and gnomAD dataset highlight the transformative impact of visionary initiatives on scientific AI.

However, significant challenges persist:

Experimental data often lacks funding for long-term preservation.

Disparate standards complicate integration and utilization, as seen with genomic datasets requiring extensive preprocessing.

Unlocking the potential of underutilized datasets, such as historical fusion experiment data or biodiversity records restricted by licensing, requires overcoming logistical and resource-related barriers.

5. Organizational Design

The ideal organizational model strikes a balance between academic freedom and industrial coordination. Renowned laboratories like Bell Labs and Xerox PARC exemplified this equilibrium, serving as inspiration for institutions like DeepMind.

Emerging scientific organizations aim to replicate this model, focusing on high-risk, high-reward research beyond the scope of traditional academia or profit-driven industry. By blending top-down goals with bottom-up creativity, these institutions empower scientists to pursue ambitious projects with the necessary support and autonomy.

Mastering the art of shifting between exploratory and exploitative phases within research projects, as practiced at DeepMind, further enhances organizational effectiveness.

6. Interdisciplinary Collaboration

Deep integration across disciplines is critical to solving complex scientific problems. Effective collaboration requires more than assembling diverse experts—it involves fostering shared methodologies and ideas.

For instance, DeepMind's Ithaca project, which used AI to restore ancient Greek inscriptions, succeeded by blending AI expertise with epigraphy. Such breakthroughs hinge on aligning team dynamics, prioritizing problem-solving over individual recognition, and cultivating curiosity-driven, respectful, and debate-friendly cultures.

Organizations can promote interdisciplinary synergies through dedicated roles, regular exchanges, and career pathways that span multiple fields.

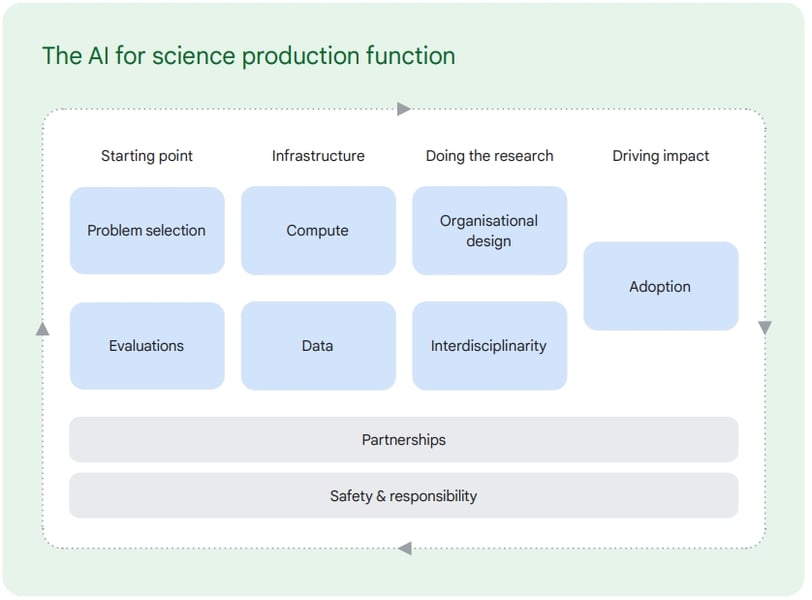

Key Elements of AI for Science

This section delves into the pivotal factors driving the realization of "AI for Science," encapsulated in a comprehensive model known as the AI for Science Production Function.

This model illustrates how AI can facilitate various stages of scientific research and innovation, emphasizing critical aspects such as:

Problem Selection: Identifying the most impactful scientific challenges.

Evaluation: Establishing effective benchmarks for assessing AI's contribution.

Infrastructure: Leveraging advanced computational resources and robust datasets.

Organizational Design: Structuring interdisciplinary collaborations effectively.

Adoption: Translating research outcomes into actionable applications.

Partnerships and Safety & Responsibility serve as the foundational pillars, ensuring the entire process is efficient, ethical, and sustainable. While many of these elements may seem intuitive, DeepMind's work has unearthed valuable insights and lessons through practical implementation.

Far from replacing traditional methods, AI enriches them. Generative AI can simulate dynamic interactions in systems like socioeconomic models, where agents (e.g., companies or consumers) learn and adapt to changing scenarios. Reinforcement learning further enhances this process by refining agents’ behavior in response to new variables, such as energy prices or pandemic policies.

5. Solutions: Navigating Vast Search Spaces

Many scientific problems involve navigating an almost incomprehensible array of potential solutions. For example, designing a small-molecule drug involves exploring more than 10^60 possibilities, while designing a protein with 400 amino acids means choosing among 20^400 possible sequences.
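To get a feel for these magnitudes, the protein figure can be converted to a power of ten. The snippet below is plain arithmetic on the numbers quoted above; the helper name is an illustrative choice.

```python
import math


def log10_sequence_count(alphabet_size: int, length: int) -> float:
    """Return log10(alphabet_size ** length) without building the huge integer."""
    return length * math.log10(alphabet_size)


# 20 standard amino acids, chain of 400 residues (figures from the text above).
protein_exponent = log10_sequence_count(20, 400)
print(f"20^400 is about 10^{protein_exponent:.0f}")  # prints "20^400 is about 10^520"
```

In other words, the protein design space is roughly 10^520, some 460 orders of magnitude larger than the 10^60 estimate for small molecules.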

Traditional methods rely on intuition, trial and error, or brute-force computation—approaches that fail to fully explore these enormous search spaces. AI, however, excels at pinpointing viable solutions efficiently.

In July 2024, AlphaProof and AlphaGeometry 2 solved four of the six problems from the International Mathematical Olympiad by generating potential solutions using Gemini LLMs. These models iteratively refined their results, combining AI-generated candidates with logic-based reasoning to identify the most likely correct solutions.
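The generate-then-verify pattern described above can be illustrated with a toy sketch. It is not how AlphaProof or AlphaGeometry 2 are implemented: the shuffled candidate pool stands in for a language model proposing ideas, the exact equation check stands in for a formal verifier, and every name here is hypothetical.

```python
import random
from typing import Callable, Iterable, List, Optional


def first_verified(
    candidates: Iterable[int],
    verify: Callable[[int], bool],
) -> Optional[int]:
    """Return the first candidate that the verifier accepts, or None."""
    for candidate in candidates:
        if verify(candidate):
            return candidate
    return None


def propose_candidates(seed: int = 0) -> List[int]:
    """Stand-in for a generative model: integers from -50 to 50 in shuffled order."""
    rng = random.Random(seed)
    pool = list(range(-50, 51))
    rng.shuffle(pool)
    return pool


def is_root(x: int) -> bool:
    """Stand-in for a rigorous checker: does x solve x^2 - 5x + 6 = 0 exactly?"""
    return x * x - 5 * x + 6 == 0


solution = first_verified(propose_candidates(), is_root)
print(solution)  # one of the two roots, 2 or 3, whichever is proposed first
```

The division of labor is the point: the proposer may be unreliable, but nothing is accepted unless the checker confirms it.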

Human-AI Collaboration: The Future of Science

While AI systems continue to improve, their greatest value lies in addressing bottlenecks that hinder scientific progress rather than automating tasks where human expertise excels. AI’s ability to process vast datasets, uncover insights, and navigate complex systems complements human creativity and critical thinking.

Science, unlike many industries, has virtually unlimited demand. Every breakthrough reveals new frontiers, creating opportunities for further discovery. As AI transforms the economics of research, it expands the need for scientists to explore uncharted domains.

As Herbert Simon envisioned, AI itself becomes a subject of scientific inquiry. Researchers will play a crucial role in evaluating and refining AI systems while developing innovative frameworks for human-AI collaboration. Together, they will redefine the boundaries of science.

4. Modeling: Understanding Complex Systems and Interactions

In 1960, physicist Eugene Wigner described the "unreasonable effectiveness" of mathematics in modeling natural phenomena like planetary motion. However, traditional mathematical models fall short when applied to complex systems like biology, economics, and weather due to their dynamic, stochastic, and emergent properties.

AI, by contrast, excels at extracting patterns from complex data. Google’s deep learning models, for instance, outperform traditional numerical models at producing 10-day weather forecasts, achieving superior speed and accuracy. AI is also instrumental in climate science, predicting phenomena such as condensation trails that exacerbate global warming.

7. Adoption and Real-World Impact

Scientific AI tools such as AlphaFold are both specialized and versatile: they focus on a limited set of tasks but serve a broad range of scientific fields, from disease research to improving fisheries. However, translating scientific advancements into real-world applications is not straightforward. For example, the germ theory of disease took a long time to be widely accepted after it was first proposed. Similarly, downstream products resulting from scientific breakthroughs (such as new antibiotics) often fail to reach full development due to a lack of appropriate market incentives.

To promote the real-world application of models, DeepMind aims to strike a balance between scientists' adoption, commercial objectives, and safety risks. It has established a dedicated Impact Accelerator to speed up the practical implementation of research and to encourage collaborations focused on societal benefit.

For scientists to easily adopt new tools, the integration process must be straightforward. For AlphaFold 2, DeepMind not only open-sourced the code but also partnered with EMBL-EBI to create a database, giving scientists with limited computational resources easy access to the predicted structures of over 200 million proteins.

AlphaFold 3 extends the tool's functionality further. To meet a surge in demand for predictions, DeepMind launched the AlphaFold Server, which lets scientists generate structure predictions on demand.

At the same time, the scientific community has also self-organized to develop tools like ColabFold, reflecting the growing attention to diverse needs and the importance of enhancing computational capabilities within the scientific field.

Scientists will only use AI models they trust. The key to promoting adoption lies in clearly defining the model’s purpose and limitations.

For example, in developing AlphaFold, DeepMind designed an uncertainty metric that visually indicates the model’s confidence in its predictions. In collaboration with EMBL-EBI, it also launched training modules that show users how to interpret confidence levels and that build trust through practical case studies.

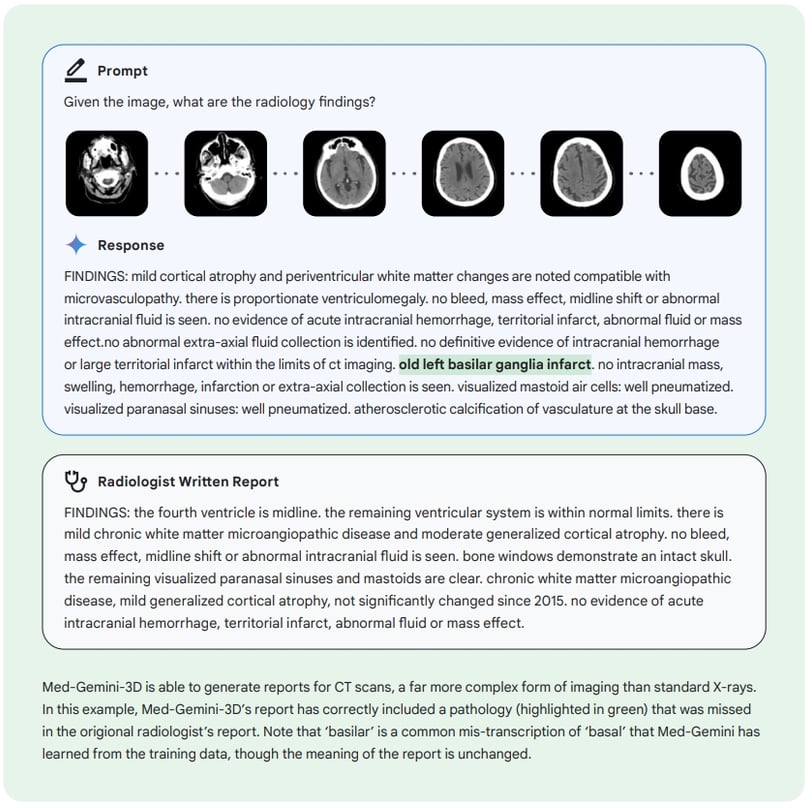
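For AlphaFold, the confidence score in question is pLDDT, a per-residue value on a 0 to 100 scale. The sketch below shows how a user might bucket such scores. The cutoffs of 90, 70, and 50 follow the bands commonly shown alongside AlphaFold structures, while the function names, the band labels as written here, and the sample scores are illustrative assumptions.

```python
from typing import List


def confidence_band(plddt: float) -> str:
    """Map a per-residue pLDDT score (0 to 100) to a coarse confidence band."""
    if not 0.0 <= plddt <= 100.0:
        raise ValueError("pLDDT must lie between 0 and 100")
    if plddt > 90:
        return "very high"
    if plddt > 70:
        return "high"
    if plddt > 50:
        return "low"
    return "very low"


def fraction_at_least(scores: List[float], cutoff: float = 70.0) -> float:
    """Fraction of residues whose pLDDT exceeds the cutoff."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s > cutoff) / len(scores)


# Hypothetical per-residue scores for a short peptide (not real model output).
scores = [95.2, 88.1, 72.4, 61.0, 43.5]
print([confidence_band(s) for s in scores])
print(fraction_at_least(scores))
```

Low-confidence regions are not necessarily errors; they often correspond to flexible or disordered parts of a protein, which is why guidance on interpreting the scores matters.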

Similarly, the Med-Gemini system excels in health-related question answering. It generates multiple reasoning chains to assess answer discrepancies and compute uncertainty. When uncertainty is high, it automatically triggers a web search to integrate the latest information.
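The idea of measuring disagreement across reasoning chains and falling back to search can be sketched as follows. This is a simplified illustration, not Med-Gemini's code: the use of Shannon entropy over final answers, the 0.8-bit threshold, and the `search` callback are all assumptions.

```python
import math
from collections import Counter
from typing import Callable, List, Tuple


def answer_entropy(answers: List[str]) -> float:
    """Shannon entropy, in bits, of the empirical distribution of sampled answers."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    total = len(answers)
    counts = Counter(answers)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def answer_with_fallback(
    sampled_answers: List[str],
    search: Callable[[], str],
    threshold_bits: float = 0.8,
) -> Tuple[str, float]:
    """Return the majority answer, or the search-backed answer when chains disagree."""
    entropy = answer_entropy(sampled_answers)
    if entropy > threshold_bits:
        # High disagreement: defer to the (hypothetical) retrieval step.
        return search(), entropy
    majority = Counter(sampled_answers).most_common(1)[0][0]
    return majority, entropy


def fake_search() -> str:
    """Placeholder for a web-search-augmented answer."""
    return "answer after search"


print(answer_with_fallback(["A", "A", "A", "A", "A"], fake_search))
print(answer_with_fallback(["A", "B", "C", "A", "D"], fake_search))
```

When all sampled chains agree, the entropy is zero and the majority answer is returned directly; when they scatter, the entropy rises above the threshold and the fallback is used.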

This approach not only enhances reliability but also provides transparency in the decision-making process, significantly boosting scientists’ trust in the model.

8. Collaboration

Scientific AI relies heavily on interdisciplinary collaboration, with partnerships between the public and private sectors being especially crucial.

From dataset creation to the sharing of outcomes, such collaboration is woven throughout the entire project lifecycle. For instance, the feasibility of new materials designed using AI models requires evaluation by seasoned materials scientists. Similarly, the proteins designed by DeepMind to combat SARS-CoV-2 must be validated through wet-lab experiments, carried out in partnership with the Crick Institute, to confirm that they bind the target as expected. Even in mathematics, the success of FunSearch on the cap set problem benefited from the expertise of mathematician Jordan Ellenberg.

Given the pivotal role industrial labs play in advancing AI development and the need for deep domain expertise, public-private partnerships will become increasingly vital in driving the cutting edge of scientific AI. To support this, more funding should be allocated to joint teams formed by universities, research institutions, and industry.

However, collaboration is not straightforward. All parties must align early on key issues: intellectual property rights, decisions on whether to publish papers, whether data and models will be open-sourced, and the applicable licensing agreements, among others. These issues often reflect differing incentives between stakeholders, but successful collaborations are typically built on clear mutual value exchanges.

For example, the AlphaFold protein database, which serves over 2 million users, owes much of its success to the combination of DeepMind’s AI model with EMBL-EBI’s expertise in biological data management. This complementary partnership is not only efficient but also maximizes the potential of AI.